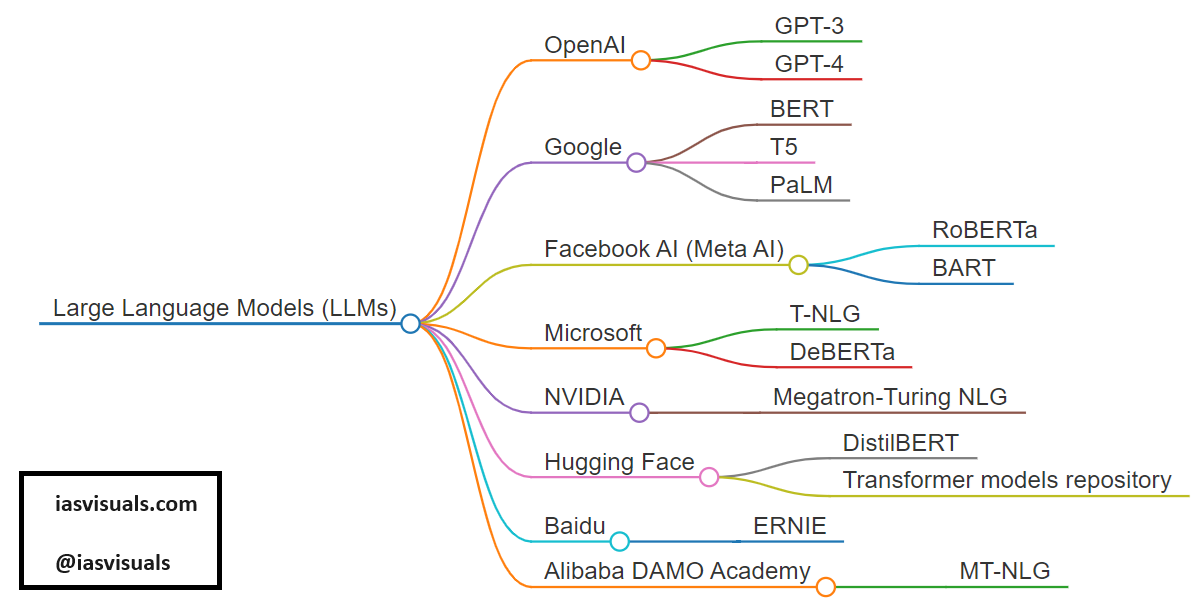

Some of the more prominent and widely recognized models across different organizations:

OpenAI:

- GPT-3: A powerful model known for its wide-ranging capabilities in generating human-like text.

- GPT-4: The latest iteration, known for its enhanced performance and larger training dataset.

Google:

- BERT (Bidirectional Encoder Representations from Transformers): Pioneered the technique of bidirectional training in language modeling.

- T5 (Text-To-Text Transfer Transformer): Converts all text-based language tasks into a unified text-to-text format.

- PaLM (Pathways Language Model): A very large model designed under Google’s Pathways system, known for its scalability and efficiency.

Facebook AI (Meta AI):

- RoBERTa (A Robustly Optimized BERT Pretraining Approach): An optimized version of BERT that improves training methodology.

- BART (Bidirectional and Auto-Regressive Transformers): Combines the benefits of BERT and GPT for both understanding and generation tasks.

Microsoft:

- T-NLG (Turing Natural Language Generation): A large-scale generative language model.

- DeBERTa (Decoding-enhanced BERT with Disentangled Attention): Improves upon BERT and RoBERTa by using a novel attention mechanism.

NVIDIA:

- Megatron-Turing NLG: One of the largest models built in collaboration with Microsoft, designed for powerful language generation.

Hugging Face:

- DistilBERT: A smaller, faster version of BERT designed for practical applications requiring less computational resources.

- Transformer models repository: Hosts a variety of community-driven models adapted for specific languages and tasks.

Baidu:

- ERNIE (Enhanced Representation through kNowledge Integration): Integrates knowledge graphs with language model training to improve performance.

Alibaba DAMO Academy:

- MT-NLG: A multilingual version of Megatron-Turing designed to handle tasks in multiple languages.

Each model has unique characteristics and is optimized for different types of language processing tasks.

Here’s a table summarizing some of the prominent large language models (LLMs) by their developers and key features:

| Model | Developer | Key Features |

|---|---|---|

| GPT-3 | OpenAI | Known for generating human-like text, with a broad range of applications. |

| GPT-4 | OpenAI | Enhanced version of GPT-3 with improved context awareness and detail in responses. |

| BERT | Pioneered bidirectional training, improving performance on a variety of language tasks. | |

| T5 | Treats all text-based tasks as text-to-text problems, simplifying the training approach. | |

| PaLM | Very large model under Google’s Pathways, designed for scalability and efficiency. | |

| RoBERTa | Facebook AI (Meta AI) | An optimized version of BERT that tweaks training processes for better performance. |

| BART | Facebook AI (Meta AI) | Combines the benefits of BERT’s and GPT’s architectures, suitable for both understanding and generation. |

| T-NLG | Microsoft | Large-scale language model for powerful language generation capabilities. |

| DeBERTa | Microsoft | Introduces a disentangled attention mechanism to improve upon BERT and RoBERTa. |

| Megatron-Turing NLG | NVIDIA | One of the largest models, designed for advanced language generation. |

| DistilBERT | Hugging Face | A lighter, faster version of BERT suitable for less resource-intensive applications. |

| ERNIE | Baidu | Enhances language representation by integrating structured world knowledge into training. |

| MT-NLG | Alibaba DAMO Academy | A multilingual language model that extends capabilities across various languages. |

This table showcases a variety of models, each with unique advantages and intended use cases, reflecting the diverse approaches in the field of natural language processing and artificial intelligence.